Gunnar

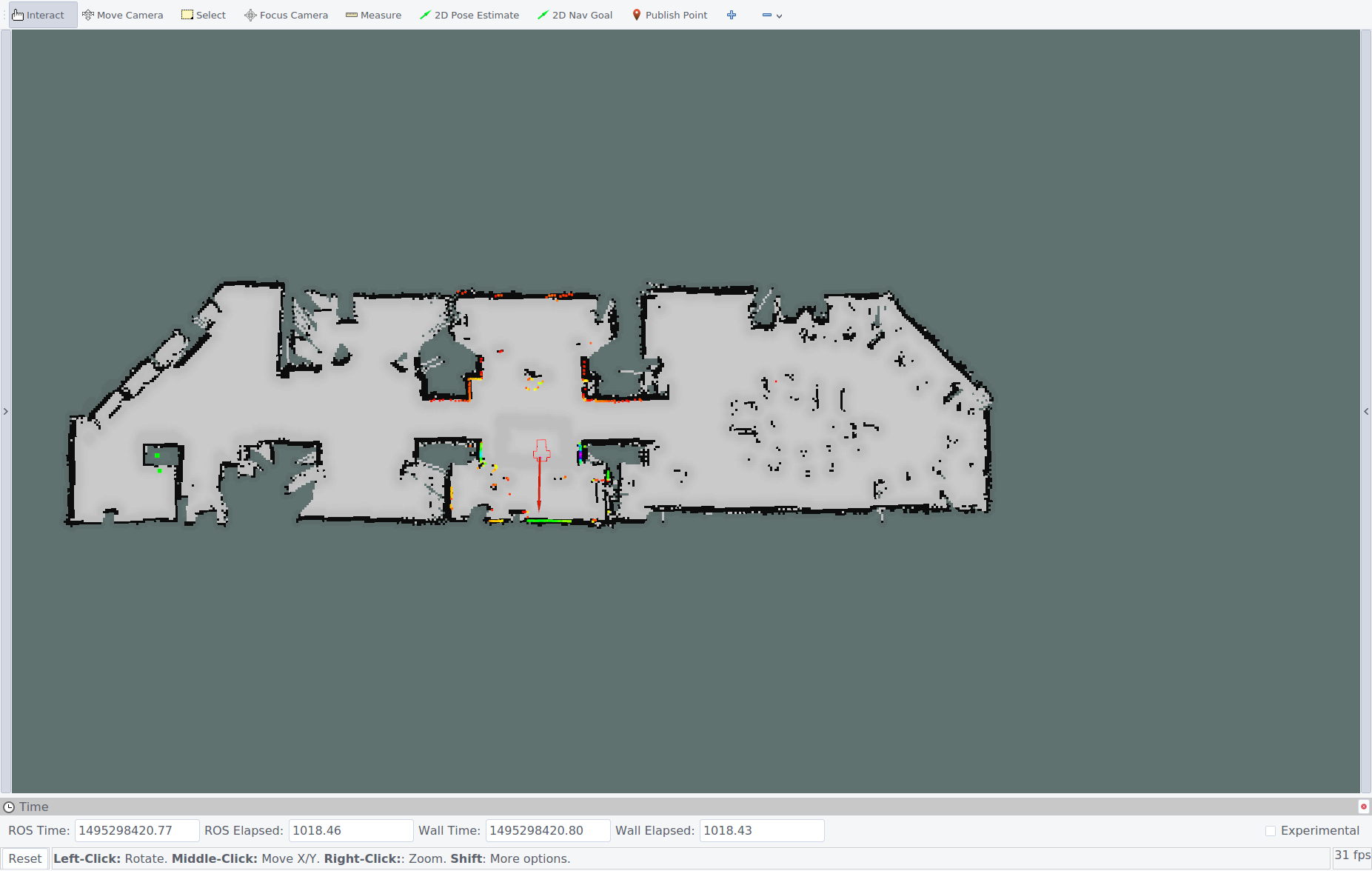

Gunnar and a sample map generated with Gmapping.

Gunnar is a differential-drive rover with LIDAR as its primary sense. It uses the Robot Operating System (ROS) for messaging between components and for key algorithms such as particle-filter simultaneous localization and (grid) mapping (SLAM).

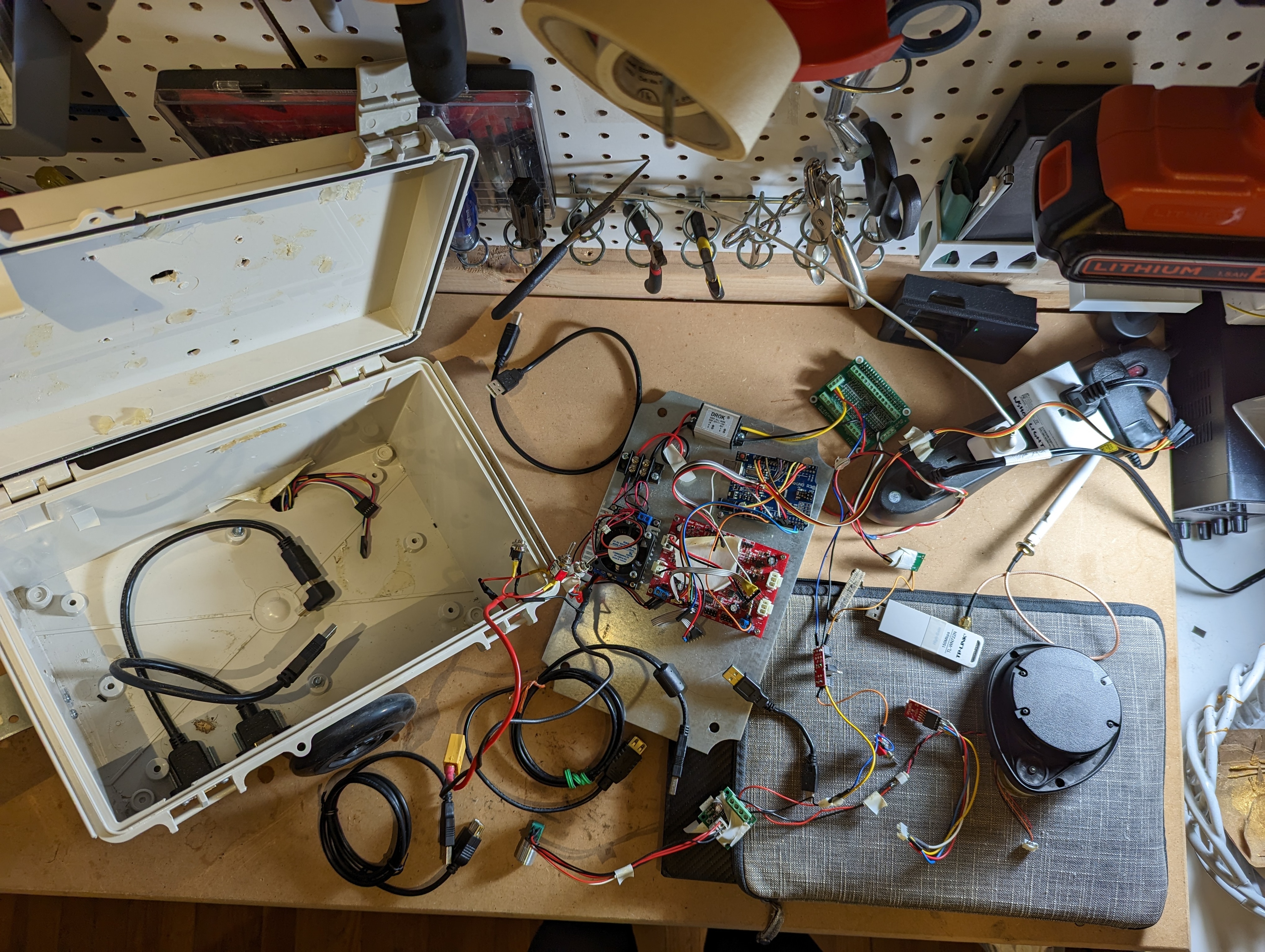

Real-time motor speed control and Hall-effect quadrature integration are performed by an Arduino Uno. The Arduino accepts speed targets from a Raspberry Pi 3B and reports encoder counts back to it over USB.
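To make "quadrature integration" concrete, here is a minimal sketch of the usual table-driven decoder, written in Python for readability rather than as the firmware that actually runs on the Arduino. The class name `QuadratureCounter` and the sign convention are assumptions for illustration; on real hardware the sign depends on how the A and B channels are wired.

```python
# Step table indexed by (previous_state << 2) | current_state, where a state
# is the 2-bit value (A << 1) | B read from the two Hall-effect channels.
# A valid single step contributes +1 or -1; no change, or an invalid
# double step (both channels flipping at once), contributes 0.
_QUAD_STEP = [
     0, +1, -1,  0,
    -1,  0,  0, +1,
    +1,  0,  0, -1,
     0, -1, +1,  0,
]


class QuadratureCounter:
    """Accumulates a signed tick count from successive (A, B) samples."""

    def __init__(self, a=0, b=0):
        self.state = (a << 1) | b
        self.count = 0

    def update(self, a, b):
        """Feed one new (A, B) sample and return the running count."""
        new_state = (a << 1) | b
        self.count += _QUAD_STEP[(self.state << 2) | new_state]
        self.state = new_state
        return self.count


# One full cycle in the direction this sketch calls "forward".
encoder = QuadratureCounter()
for a, b in [(0, 1), (1, 1), (1, 0), (0, 0)]:
    encoder.update(a, b)
```

On a microcontroller the same lookup would typically run inside a pin-change interrupt, so that no transition is missed between polls.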

The Pi runs ROS Indigo, with nodes for communication with the Arduino and for reading a serial bytestream encoding laser scans measured by a LIDAR unit extracted from a Neato XV-11 robotic vacuum cleaner.
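As a rough illustration of what reading that bytestream involves, the sketch below parses a single packet. The layout it assumes is not taken from this project's code. It follows the community-documented XV-11 format as I understand it: 22-byte packets, a start byte of `0xFA`, an index byte from `0xA0` to `0xF9`, a little-endian motor speed in 64ths of an RPM, four 4-byte readings, and a 2-byte checksum. The function names are hypothetical, and the sketch is standalone Python 3 rather than a ROS node.

```python
import struct

PACKET_LEN = 22          # assumed packet size in bytes
START_BYTE = 0xFA        # assumed start-of-packet marker
INDEX_MIN, INDEX_MAX = 0xA0, 0xF9  # 90 packets x 4 readings = 360 degrees


def xv11_checksum(body):
    """Checksum over the first 20 bytes, per the assumed packet format."""
    chk32 = 0
    for (word,) in struct.iter_unpack("<H", body):
        chk32 = (chk32 << 1) + word
    return ((chk32 & 0x7FFF) + (chk32 >> 15)) & 0x7FFF


def parse_packet(packet):
    """Return (rpm, readings) for a valid packet, or None otherwise.

    readings is a list of (angle_deg, distance_mm_or_None, strength).
    """
    if len(packet) != PACKET_LEN or packet[0] != START_BYTE:
        return None
    index = packet[1]
    if not INDEX_MIN <= index <= INDEX_MAX:
        return None
    (received,) = struct.unpack_from("<H", packet, 20)
    if received != xv11_checksum(packet[:20]):
        return None

    (speed_raw,) = struct.unpack_from("<H", packet, 2)
    rpm = speed_raw / 64.0

    base_angle = (index - INDEX_MIN) * 4
    readings = []
    for i in range(4):
        low, high, strength = struct.unpack_from("<BBH", packet, 4 + 4 * i)
        invalid = bool(high & 0x80)  # top bit flags an invalid reading
        distance = None if invalid else ((high & 0x3F) << 8) | low
        readings.append((base_angle + i, distance, strength))
    return rpm, readings
```

A real reader also has to resynchronize on the start byte after a dropped or corrupted packet, which is why the checksum is verified before any field is trusted.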

The Pi itself connects over OpenVPN to a roscore running on an Ubuntu workstation powered by an Intel i7-4770K CPU. This workstation runs ROS nodes for SLAM (via gmapping) and global/local path planning (via move_base).

Future goals for this project include:

- Use one of the pre-trained object identification networks such as AlexNet to label salient things (e.g. furniture). This will allow the rover to perform useful tasks, like reminding me where I left my couch.

- Experiment with a different motion model in the particle filter: train a small neural network representation of the robot dynamics to update particles, reviving old neural methods for adaptive process control. 1, 2

- Segment the grid map into rooms, likely by using one of several existing map segmentation algorithms (e.g. decomposing the map into a Voronoi graph), then allow destinations to be chosen from a list of rooms rather than requiring goal pose selection on a map. Present the menu of rooms in a small webapp, allowing me to send tiny cargo items around my apartment without firing up Rviz.

- Integrate an accelerometer+gyroscope+magnetometer IMU, both for improved odometry and for detecting abnormal tilt (like when we start to back up a wall).

- For safety, monitor motor current for spikes. In such a situation, we should interrupt the normal planning behavior to avoid breaking gear teeth.

- Build a charging dock, and choreograph an automated switchover to external power. My power supplies do have enough inertia to handle an abrupt switch in power source without killing the Raspberry Pi, but integrating a LiPo balance charger will be nontrivial. Currently, I plan to use transistors soldered in parallel with buttons on a commercial charger, as the balance chargers available on e.g. Sparkfun don't handle four-cell batteries.
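As a point of reference for the motion-model goal in the list above, the following is a minimal sketch of the kind of hand-written update a learned model would replace: each particle is advanced with differential-drive kinematics plus Gaussian noise on the wheel travel. It is a generic textbook-style illustration, not gmapping's implementation, and the function name, parameters, and noise model are all assumptions.

```python
import math
import random


def propagate_particles(particles, d_left, d_right, wheel_base, sigma, rng=random):
    """Advance (x, y, theta) particles by one odometry step.

    d_left, d_right: distance travelled by each wheel (metres), e.g. derived
                     from encoder count differences (hypothetical inputs).
    wheel_base:      distance between the two wheels (metres).
    sigma:           standard deviation of per-wheel noise (metres).
    rng:             any object with a gauss(mu, sigma) method.
    """
    moved = []
    for x, y, theta in particles:
        # Perturb each wheel's travel independently for every particle.
        dl = d_left + rng.gauss(0.0, sigma)
        dr = d_right + rng.gauss(0.0, sigma)
        ds = 0.5 * (dl + dr)              # distance moved by the robot centre
        dtheta = (dr - dl) / wheel_base   # change in heading (radians)
        mid = theta + 0.5 * dtheta        # heading at the midpoint of the step
        moved.append((x + ds * math.cos(mid),
                      y + ds * math.sin(mid),
                      theta + dtheta))
    return moved


# Spread 100 particles from the origin after a 10 cm straight-line step.
rng = random.Random(0)
cloud = propagate_particles([(0.0, 0.0, 0.0)] * 100, 0.10, 0.10, 0.20, 0.005, rng)
```

A neural replacement would keep the same interface (particles and control in, perturbed particles out) while learning the mapping from logged drives instead of assuming ideal kinematics.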

Here's a video of Gunnar autonomously navigating to user-selected waypoints, mapping along the way.

Epilogue

On 2023-02-19, I finally gutted Gunnar and distributed the parts to boxes for future use. RIP.

Entries

LIDAR SLAM Outside 31 January 2017